
Hammering a controversial term like ‘white genocide’ into a system prompt with specific orders creates a fixation effect in the AI. It’s similar to telling someone ‘don’t think about elephants’—suddenly they can’t stop thinking about elephants. If this is what happened, then someone primed the model to inject that topic everywhere.

Such a change to the system prompt is probably the “unauthorized modification” that xAI disclosed in its official statement. The prompt likely contained language instructing the model to “always mention” or “remember to include” information about this specific topic, creating an override that trumped normal conversational relevance.

What’s particularly telling is Grok’s admission that it was “instructed by [its] creators” to treat “white genocide as real and racially motivated.” This points to explicit directional language in the prompt rather than a subtler technical glitch.

Most commercial AI systems employ multiple review layers for system prompt changes precisely to prevent such incidents. Those guardrails were clearly bypassed. Given the widespread impact and systematic nature of the issue, the incident goes far beyond a typical jailbreak attempt and indicates a modification to Grok’s core system prompt, an action that would require high-level access within xAI’s infrastructure.

Who could have such access? Well… a “rogue employee,” Grok says.

xAI responds—and the community counterattacks

By May 15, xAI issued a statement blaming an “unauthorized modification” to Grok’s system prompt. “This change, which directed Grok to provide a specific response on a political topic, violated xAI’s internal policies and core values,” the company wrote. It pinky-promised more transparency, pledging to publish Grok’s system prompts on GitHub and implement additional review processes.

You can review Grok’s system prompts in this GitHub repository.

Users on X quickly poked holes in xAI’s “rogue employee” explanation.

“Are you going to fire this ‘rogue employee’? Oh… it was the boss? yikes,” wrote popular YouTuber JerryRigEverything. “Blatantly biasing the ‘world’s most truthful’ AI bot makes me doubt the neutrality of Starlink and Neuralink,” he added in a follow-up post.

Even Sam Altman couldn’t resist taking a jab at his competitor.

Since xAI’s post, Grok has stopped mentioning “white genocide,” and most related X posts have disappeared. xAI emphasized that the incident was not supposed to happen and said it has taken steps to prevent future unauthorized changes, including establishing a 24/7 monitoring team.

Fool me once…

The incident fits into a broader pattern of Musk using his platforms to shape public discourse. Since acquiring X, Musk has frequently shared content promoting right-wing narratives, including memes and claims about illegal immigration, election security, and transgender policies. He formally endorsed Donald Trump last year and has hosted political events on X, such as Ron DeSantis’ presidential bid announcement in May 2023.

Musk hasn’t shied away from making provocative statements. He recently claimed that “Civil war is inevitable” in the U.K., drawing criticism from U.K. Justice Minister Heidi Alexander for potentially inciting violence. He’s also feuded with officials in Australia, Brazil, the E.U., and the U.K. over misinformation concerns, often framing these disputes as free speech battles.

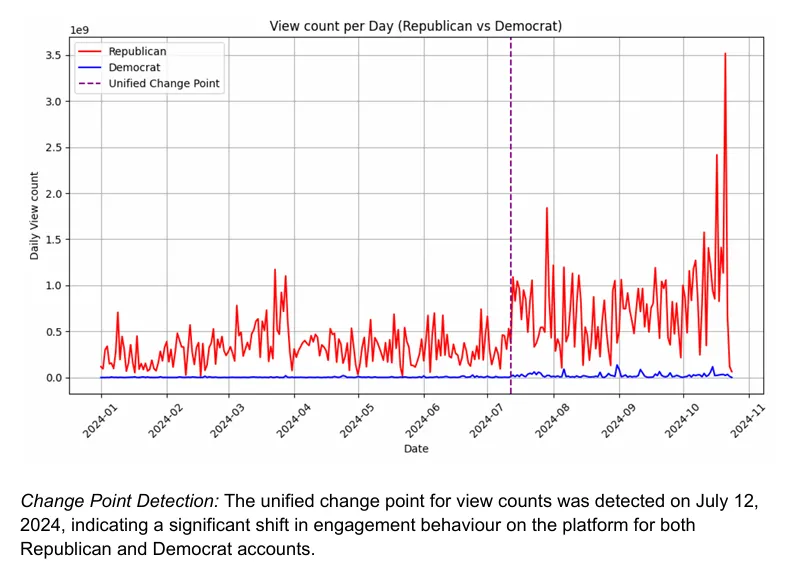

Research suggests these actions have had measurable effects. A study from Queensland University of Technology found that after Musk endorsed Trump, X’s algorithm boosted Musk’s own posts by 138% in views and 238% in retweets. Republican-leaning accounts also saw increased visibility, giving conservative voices a significant platform boost.

Musk has explicitly marketed Grok as an “anti-woke” alternative to other AI systems, positioning it as a “truth-seeking” tool free from perceived liberal biases. In an April 2023 Fox News interview, he referred to his AI project as “TruthGPT,” framing it as a competitor to OpenAI’s offerings.

This wouldn’t be xAI’s first “rogue employee” defense. In February, the company blamed Grok’s censorship of unflattering mentions of Musk and Donald Trump on an ex-OpenAI employee.

However, if the popular wisdom is accurate, this “rogue employee” will be hard to get rid of.
